Master the red green refactor TDD cycle with this practical guide. Learn the workflow, explore real-world examples, and build higher-quality, maintainable code.

November 29, 2025 (2mo ago)

A Guide to Red Green Refactor TDD

Red-Green-Refactor TDD: Practical Guide

Summary: Master the Red-Green-Refactor TDD cycle with practical workflow, examples, and business benefits to build cleaner, maintainable code.

Introduction

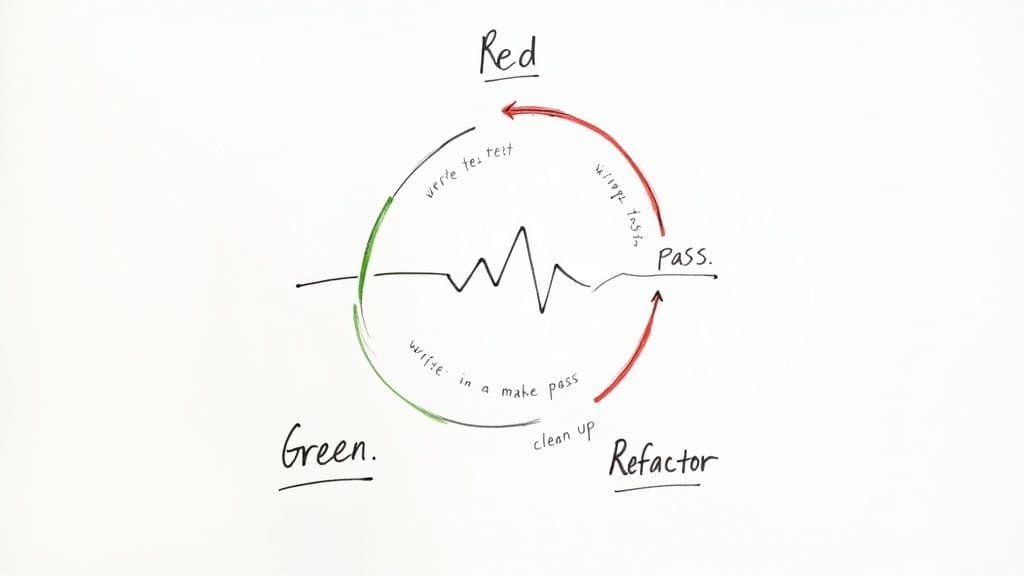

The Red‑Green‑Refactor Test‑Driven Development (TDD) cycle is a simple, disciplined workflow that helps teams design and ship reliable software. Start with a failing test (Red), write the minimum code to pass it (Green), then clean up the implementation (Refactor). Repeating this loop turns uncertainty into small, verifiable steps that improve quality and reduce risk.

The Rhythm of Test‑Driven Development

Many developers assume TDD is only about testing, but it’s primarily a design practice. Writing the test first forces you to think about how the code will be used before implementation, flipping the usual development flow. This approach reduces guesswork and encourages small, deliberate progress. The Red‑Green‑Refactor cycle becomes the heartbeat of reliable development.

Understanding the Three Stages

Each stage has a focused purpose and keeps the work small and verifiable.

- Red phase (failing test): Write a single automated test that represents the smallest useful behaviour. The test fails because the implementation doesn’t exist yet. Seeing it fail for that reason confirms that the test really exercises the new behaviour and can’t pass by accident.

- Green phase (make it pass): Implement the smallest amount of code needed to satisfy the test. Prioritize simplicity over elegance to avoid over‑engineering.

- Refactor phase (improve the code): With tests passing as a safety net, clean up names, remove duplication, and improve structure without changing behaviour.

The refactor step is non‑negotiable. Skipping it accumulates technical debt and makes future changes harder.
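As a framework‑free illustration of one full loop, here is a minimal sketch. The `slugify` function and the hand‑rolled `expectEqual` helper are assumptions made up for this example; they are not part of the LikeButton walkthrough in this guide, and a real project would normally use a test runner such as Jest instead.

```typescript
// Hypothetical example: `slugify` and `expectEqual` are illustrative names only.

// Tiny stand-in for a test runner's assertion, so the sketch has no dependencies.
function expectEqual<T>(actual: T, expected: T, label: string): void {
  if (actual !== expected) {
    throw new Error(`${label}: expected ${String(expected)}, got ${String(actual)}`);
  }
}

// Red: these checks are written first. Until `slugify` exists they cannot pass
// (in TypeScript they would not even compile).
function runSlugifyTests(): void {
  expectEqual(slugify("Hello World"), "hello-world", "lowercases and hyphenates");
  expectEqual(slugify("  Red Green  Refactor "), "red-green-refactor", "collapses whitespace");
}

// Green: a first passing version might be deliberately plain, for example:
//   return input.trim().toLowerCase().split(" ").filter((p) => p !== "").join("-");
//
// Refactor: same behaviour, clearer intent, verified by the same checks.
function slugify(input: string): string {
  const words = input.trim().toLowerCase().split(/\s+/);
  return words.join("-");
}

// Throws on the first failing check; returns silently when everything passes.
runSlugifyTests();
```

The point of the sketch is the ordering: the checks exist before the implementation, and the refactored version is only accepted because the same checks still pass.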

Red‑Green‑Refactor at a Glance

| Phase | Purpose | Developer Goal |
|---|---|---|
| Red | Define the requirement and validate the test | Write one small test that fails |
| Green | Satisfy the requirement | Add the minimum code to make the test pass |
| Refactor | Improve internal quality | Clean up duplication and clarify intent |

Adopting this cadence helps teams move predictably and confidently. In some regions, many teams have already incorporated TDD into their practices; adoption patterns and outcomes vary by market and organization [1].

Walking Through the TDD Cycle in Action

To see the cycle in practice, we’ll build a practical UI example: a LikeButton component using TypeScript, React, and Jest. This example shows how TDD guides design while keeping behaviour predictable.

Red phase: define the first requirement

The simplest requirement is: the component renders without crashing and displays “Like.” We write the test before the component.

    // LikeButton.test.tsx
    import React from 'react';
    import { render, screen } from '@testing-library/react';
    // Registers matchers such as toBeInTheDocument and toBeDisabled
    // (not needed here if your Jest setup file already imports it).
    import '@testing-library/jest-dom';
    import LikeButton from './LikeButton';

    describe('LikeButton', () => {
      it('renders a button with the initial text "Like"', () => {
        render(<LikeButton />);
        const likeButton = screen.getByRole('button', { name: /like/i });
        expect(likeButton).toBeInTheDocument();
      });
    });

Running the test fails because the component doesn’t exist yet. This is the Red phase—exactly what we want.

Green phase: just enough to pass

Create the minimal component to satisfy the test.

    // LikeButton.tsx
    import React from 'react';

    const LikeButton = () => {
      return <button>Like</button>;
    };

    export default LikeButton;

Run the tests again and they pass. That completes the Green phase.

Refactor phase: polish the implementation

Now clean up the code. Add types and establish a pattern for future expansion.

    // LikeButton.tsx (refactored)
    import React, { FC } from 'react';

    type LikeButtonProps = {};

    const LikeButton: FC<LikeButtonProps> = () => {
      return <button>Like</button>;
    };

    export default LikeButton;

Tests still pass. The safety net lets you improve the code with confidence.

Iteration example: clicking the button

New requirement: clicking the button changes its text to “Liked” and disables it to prevent multiple clicks. Start with a failing test.

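    // LikeButton.test.tsx (add inside the existing describe block)
    // Also extend the import at the top of the file:
    //   import { render, screen, fireEvent } from '@testing-library/react';
    it('changes text to "Liked" and becomes disabled when clicked', () => {
      render(<LikeButton />);
      const likeButton = screen.getByRole('button', { name: /like/i });

      fireEvent.click(likeButton);

      expect(likeButton).toHaveTextContent('Liked');
      expect(likeButton).toBeDisabled();
    });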

Implement the minimal behaviour to pass the test.

    // LikeButton.tsx
    import React, { FC, useState } from 'react';

    type LikeButtonProps = {};

    const LikeButton: FC<LikeButtonProps> = () => {
      const [liked, setLiked] = useState(false);

      const handleClick = () => setLiked(true);

      return (
        <button onClick={handleClick} disabled={liked}>
          {liked ? 'Liked' : 'Like'}
        </button>
      );
    };

    export default LikeButton;

Run the suite and everything is green. Refactor if anything needs tidying, then repeat: one small requirement at a time, protected by tests.

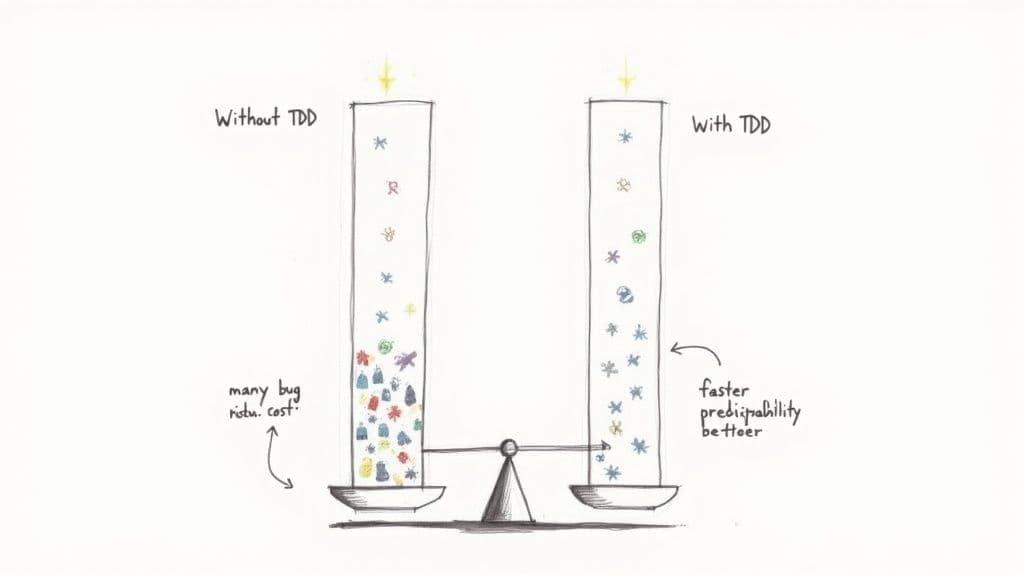

The Business Case for Code Quality

TDD’s engineering benefits quickly translate into business value. Fewer defects in production mean lower support costs, less customer churn, and a stronger brand reputation. When defects are caught early, they’re cheaper to fix, and teams can spend more time building new, valuable features.

Empirical studies and industry reports have linked disciplined TDD practice to measurable quality improvements and reduced debugging effort [2].

Reducing post‑release defects and maintenance costs

By writing tests before the code, you only add production code that is required to satisfy a test. This creates a robust safety net and reduces unexpected regressions. Several studies and industry reports document lower defect rates and less time spent on rework for teams that consistently apply TDD and automated testing practices [2].

A focus on quality upfront lowers the total cost of ownership for software, because you avoid the compounding cost of technical debt over time.

Accelerating onboarding and improving predictability

A comprehensive test suite serves as executable documentation. New developers can run tests to learn the system’s expected behaviour instead of relying on outdated wikis. This shortens ramp time and reduces the burden on senior engineers.

Consistent TDD practices also improve predictability. Breaking work into many small, tested cycles makes estimates and progress tracking more reliable, which helps with planning and stakeholder communication [3].

Common TDD Pitfalls and How to Avoid Them

TDD is simple to describe but subtle to master. The following anti‑patterns commonly undermine TDD’s benefits.

Integration tests in unit test clothing

Problem: A test ends up exercising many moving parts—the component, its services, API clients, and possibly a database. The test becomes slow, brittle, and noisy.

Fix: Test a single unit in isolation. Use mocks, stubs, and fakes for external dependencies. If you need integration coverage, write dedicated integration tests that run separately.

A true unit test shouldn’t touch the network, filesystem, or a real database. Its speed and reliability are what let you refactor fearlessly [4].
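One way to keep a unit isolated is to pass its external dependency in and substitute an in‑memory fake during tests. The sketch below assumes a hypothetical `LikeApi` interface, `LikeService` class, and `FakeLikeApi` test double; none of these names come from a real library or from the LikeButton example.

```typescript
// Hypothetical names throughout: LikeApi, LikeService, FakeLikeApi.

// The boundary the unit depends on. Production code would implement it with HTTP.
interface LikeApi {
  saveLike(postId: string): Promise<void>;
}

// Unit under test: it receives its dependency instead of creating it.
class LikeService {
  private readonly api: LikeApi;

  constructor(api: LikeApi) {
    this.api = api;
  }

  async like(postId: string): Promise<"liked" | "failed"> {
    try {
      await this.api.saveLike(postId);
      return "liked";
    } catch {
      return "failed";
    }
  }
}

// In-memory fake: no network, records calls, and can simulate an outage.
class FakeLikeApi implements LikeApi {
  readonly saved: string[] = [];
  private readonly shouldFail: boolean;

  constructor(shouldFail = false) {
    this.shouldFail = shouldFail;
  }

  async saveLike(postId: string): Promise<void> {
    if (this.shouldFail) throw new Error("simulated outage");
    this.saved.push(postId);
  }
}

// Exercises the success path and the failure path without touching a real API.
async function runLikeServiceTests(): Promise<string[]> {
  const results: string[] = [];
  const workingApi = new FakeLikeApi();
  results.push(await new LikeService(workingApi).like("post-1"));
  results.push(workingApi.saved.join(","));
  results.push(await new LikeService(new FakeLikeApi(true)).like("post-2"));
  return results;
}
```

Because the fake runs entirely in memory, both paths execute in milliseconds, and the failure case is deterministic rather than dependent on a flaky network.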

Testing implementation instead of behaviour

Problem: Tests assert internal details instead of public behaviour. Tests break when you improve or refactor internals, even though behaviour remains correct.

Fix: Test the public API and observable effects. Ask, given this input, what is the expected output? Tests that verify behaviour resist unrelated refactors and remain valuable documentation.
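To make the distinction concrete, here is a small sketch using a hypothetical `ShoppingCart` class (an assumption for illustration only). The check asserts on what the public API reports, while the comment at the end shows the kind of assertion that couples a test to internals.

```typescript
// Hypothetical example: ShoppingCart is illustrative and not from this guide's codebase.

class ShoppingCart {
  // Internal storage: free to change (for example, to a Map) without affecting callers.
  private quantities: Record<string, number> = {};
  private prices: Record<string, number> = {};

  add(sku: string, unitPriceCents: number, quantity = 1): void {
    this.prices[sku] = unitPriceCents;
    this.quantities[sku] = (this.quantities[sku] ?? 0) + quantity;
  }

  totalCents(): number {
    return Object.keys(this.quantities).reduce(
      (sum, sku) => sum + this.quantities[sku] * this.prices[sku],
      0,
    );
  }
}

// Behaviour-focused check: given these inputs, what does the public API report?
function cartTotalIsCorrect(): boolean {
  const cart = new ShoppingCart();
  cart.add("pen", 150, 2);
  cart.add("notebook", 500);
  cart.add("pen", 150);
  // 3 pens at 150 plus 1 notebook at 500
  return cart.totalCents() === 950;
}

// Brittle alternative (avoid): reaching into private state, for example
//   (cart as any).quantities["pen"] === 3
// Such a check breaks when the storage changes, even though totals stay correct.
```

If the internal records were later replaced with a different structure, `cartTotalIsCorrect` would keep passing unchanged, which is the property that makes refactoring cheap.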

Skipping the refactor step

Problem: Developers rush to the next feature after getting tests to pass, leaving messy implementations behind.

Fix: Treat refactor as a required step. With tests passing, small cleanups are safe and compound into a codebase that’s easy to change.

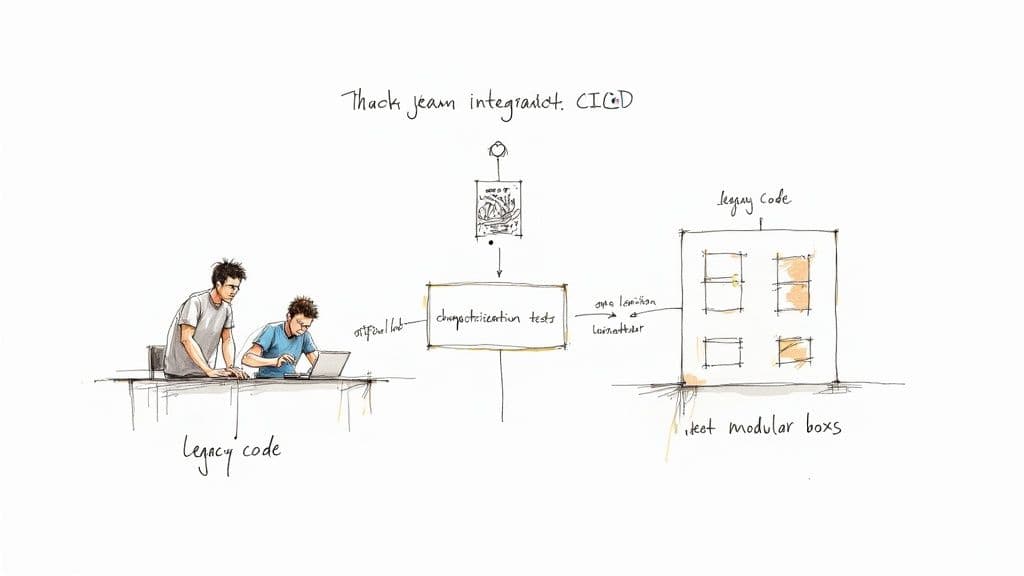

Integrating TDD into Your Team and Legacy Code

Adopting TDD is a cultural change as much as a technical one. Encourage hands‑on learning, make tests part of the team’s Definition of Done, and celebrate test‑driven wins.

Championing TDD within the team

Practical ways to build momentum:

- Pair programming, where an experienced developer guides a teammate through the Red‑Green‑Refactor cycle.

- Mob programming for tougher problems, rotating who drives to spread knowledge.

- Lunch‑and‑learn sessions that demonstrate real examples of TDD in your codebase.

Start small, and let early test catches become proof points for the team.

Taming legacy code with characterization tests

When code is untested and risky to change, write characterization tests to document current behaviour. These tests let you refactor or add features with confidence, by first asserting how the system behaves today.
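A characterization test can be sketched as a table of inputs paired with whatever the code currently returns. The `legacyShippingCents` function below is a made‑up stand‑in for untested legacy code, and the expected values are assumed to have been recorded by running it, not taken from a specification.

```typescript
// Hypothetical legacy function: imagine it is untested and its rules are undocumented.
function legacyShippingCents(weightKg: number, express: boolean): number {
  let cost = 500;
  if (weightKg > 10) cost += (weightKg - 10) * 40;
  if (express) cost = Math.round(cost * 1.5);
  if (weightKg <= 0) cost = 0;
  return cost;
}

// Characterization cases: [weightKg, express, observed output].
// The third value records what the code does today, even where that looks odd.
const characterizationCases: Array<[number, boolean, number]> = [
  [1, false, 500],
  [10, false, 500],
  [12, false, 580],
  [12, true, 870],
  [0, true, 0],
];

// Returns a description of every case whose current output differs from the record.
function findCharacterizationFailures(): string[] {
  return characterizationCases
    .filter(([weightKg, express, observed]) => legacyShippingCents(weightKg, express) !== observed)
    .map(([weightKg, express, observed]) => `weight=${weightKg} express=${express} recorded=${observed}`);
}
```

With these cases pinned, a refactor of `legacyShippingCents` is safe as long as `findCharacterizationFailures()` stays empty; any intentional behaviour change then shows up as an explicit edit to the recorded values.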

Automating quality with CI/CD pipelines

Run the test suite on every commit in CI. This provides immediate feedback, enforces quality gates, and makes passing tests a mandatory step before merging. Automation keeps the test feedback loop fast and dependable.

Your Top TDD Questions, Answered

Does TDD replace other kinds of testing?

No. TDD focuses on unit tests as a design tool, but you still need integration tests and end‑to‑end tests to validate component interactions and full user flows.

How do I use TDD with databases or external APIs?

Isolate code from external dependencies by using mocks, stubs, or fakes. Test your logic in a bubble and reserve real integration tests for a separate test suite.

Is it worth testing simple UI components?

Yes, when you test behaviour, not implementation. Verify what users see and do, such as whether a button renders the right label or triggers the correct action when clicked.

Q&A — Common user questions

Q: How long before my team sees value from TDD?

A: Value appears quickly in reduced regression bugs and faster debugging. The pace depends on team size and discipline, but small, consistent wins often show up within a few sprints.

Q: What’s the smallest first step to adopt TDD?

A: Start with a single new feature or noncritical bug. Require a failing test before implementation and enforce the refactor step.

Q: How do I convince stakeholders to invest time in tests?

A: Present the long‑term cost savings: fewer production incidents, lower maintenance costs, and faster feature delivery over time. Use concrete incidents your team has faced as examples.
